Tactile Reading Program “Sawaru Glyph”:

Overview of Principles, Effectiveness, Clinical Research, and Intellectual Property

Introduction

Sawaru Glyph is a tactile reading program that uses three-dimensional letters to promote learning through the combined actions of seeing, touching, and reading aloud. It was developed to improve reading and spelling abilities in individuals with developmental dyslexia. For clinical research conducted in Japan using this program, see the study “Multisensory learning with haptic reading plates improved RAN (Rapid Automatized Naming), reading, and writing skills” (original article in Japanese, with an English translation)【1】.

I. Sawaru Glyph, Dyslexia, and Active Touch Perception

II. Principles and Structure of the Sawaru Glyph Learning System

III. Clinical Research on Tactile Reading-Based Learning (as of 2025)

IV. Technical Innovations and Intellectual Property: Patents, Utility Models, and Copyrights

V. Future Outlook and Potential Applications

I. Sawaru Glyph, Dyslexia, and Haptics

Sawaru Glyph is a tactile reading program that uses 3D-printed letters, allowing users to “see, touch, and read aloud” while engaging with accompanying video and audio content. With the included instruction manual and assessment sheets, it can also be used at home. This program utilizes the cognitive benefits of haptics (the sense of touch) to enhance four core literacy-related functions:

- Formation of letter-shape memory

- Strengthening of letter–sound associations

- Development of word-form (spelling pattern) memory

- Potential enhancement of Rapid Automatized Naming (RAN)

While tactile reading systems such as braille have long existed for individuals with visual impairments, no tactile reading method had previously been developed specifically for sighted individuals.

In 2020, Sawaru Glyph received the world’s first technology patent from the Japan Patent Office for a tactile learning method designed to help sighted individuals acquire knowledge of letter shapes and spelling【2】.

1. Developmental Dyslexia

One of the primary target groups for Sawaru Glyph is individuals with developmental dyslexia. Dyslexia is a learning disorder characterized by significant difficulty with reading and spelling, despite normal vision, hearing, and intelligence【3】. People with dyslexia often struggle to link letters to sounds and to recognize words as whole units. As a result, reading becomes slow, effortful, and tiring. Spelling difficulties often include an inability to recall correct spellings even after repeated exposure, typically because memory representations for letter shapes and word spellings are weak. Neurologically, dyslexia is associated with individual differences in the brain functions required for reading and writing【4】. Recent research has identified a range of cognitive vulnerabilities in dyslexia, including phonological processing, visual recognition, and rapid automatized naming (RAN)【5】. Sawaru Glyph was developed to address these vulnerabilities: by incorporating mechanisms of haptic learning, the program aims to compensate for the cognitive weaknesses associated with dyslexia.

2. The Role of Haptics

Multisensory learning approaches that incorporate haptics, such as clay modeling and writing letters in sand, have long been used around the world as educational interventions for developmental dyslexia【6】. Recent studies suggest that the effectiveness of these methods may stem from multisensory memory formation processes that are facilitated by haptic input. Moreover, although still hypothetical, haptic-based learning may have unique benefits for improving Rapid Automatized Naming (RAN), a core cognitive function closely linked to reading ability.

Enhancement of Shape Memory Through Haptics

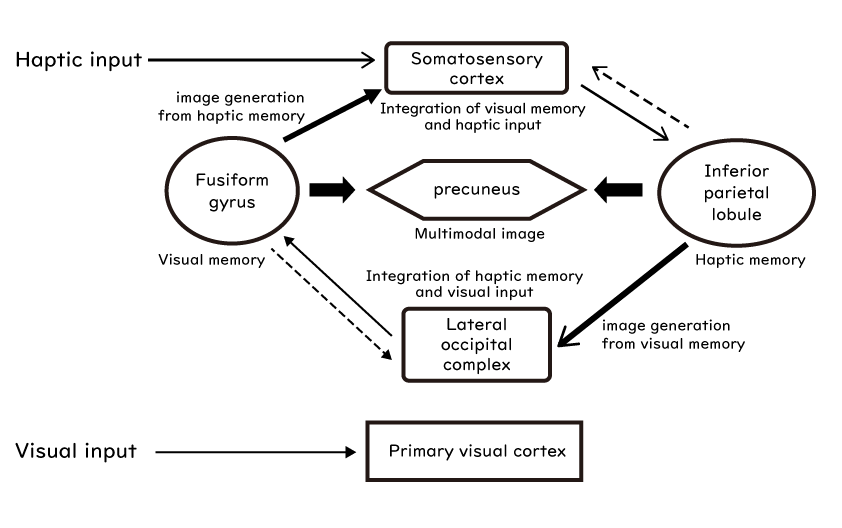

One of the primary effects of haptics is the enhancement of shape memory through tactile feedback. When a person actively touches and explores an object they have visually observed, their attention to that object increases, which in turn strengthens the memory representation of its shape and structural features. Another key effect is the integration of visual and tactile modalities. Recent fMRI studies【7】 have demonstrated that visual and tactile information are integrated through shared neural networks in the brain, specifically from the lateral occipital complex (LOC) to the fusiform gyrus, resulting in accurate and concrete multimodal representations. This memory-enhancing effect was also confirmed in a haptic memory experiment I (Miyazaki) conducted in 2023 using three-dimensional geometric figures. In that study, “Visuo-Haptic Multisensory Learning Enhances Encoding and Recall of Rey-Osterrieth Complex Figures” (original article in Japanese, with an English translation), haptic learning produced significant improvements in figure memory【8】.

Binding of Visual and Phonological Representations

The second key function of haptics is its ability to facilitate the association between visual representations (spelling) and phonological representations (spoken sounds). A study conducted in France【9】 investigated how preschool children learn the relationship between word spellings and their corresponding pronunciations. The researchers found that incorporating tactile learning improved children’s performance on decoding tasks designed to assess their ability to link spellings with sounds. They concluded that haptics served as a mediating factor by integrating simultaneous visual input (spelling) with continuous phonological input (sound), thereby enhancing structural understanding of the relationship between letters and sounds. By allowing learners to physically explore the spelling with their hands while simultaneously perceiving the corresponding sound, the connection between graphemes and phonemes is reinforced. These findings suggest that Sawaru Glyph may be effective in supporting individuals with dyslexia by promoting visual–phonological integration and addressing their difficulties with decoding and encoding spelling and sound relationships.

II. Principles of Sawaru Glyph

Haptics has long been used in literacy instruction for individuals with dyslexia. However, traditional multisensory learning methods have typically been limited to letter-level activities—such as shaping letters out of clay or exploring letter blocks through touch. While these approaches can help develop memory for letter shapes, they often fall short in strengthening the association between letters and sounds or in forming whole-word memory, both of which are essential for fluent reading.

To overcome these limitations, Sawaru Glyph introduces a novel tactile reading program. In this program, learners follow a structured process: they watch and listen to audiovisual content while looking at and touching three-dimensional tactile plates and reading aloud. Through this integrated approach, the program is designed to enhance the following cognitive functions necessary for reading and spelling:

- Formation of letter-shape memory

- Development of associative memory between letter shapes and sounds

- Formation of word-form memory

- Generalization at the sentence level

1. Phase One: Formation of Letter-Shape Memory and Integration of Letter–Sound Associations

In the first phase of learning, students engage in tactile reading of the 26 letters of the alphabet. While watching dedicated video and audio content, they visually observe and simultaneously touch three-dimensional letters with their fingers while reading aloud. Through this multisensory process, tactile feedback enhances the formation of robust memory for letter shapes and facilitates the integration of letter and sound associations.

2. Phase Two: Formation of Word-Form Memory

Next, learners read three-dimensionally printed word lists through tactile exploration. These word lists are structured according to phonics principles, progressing from simple spellings to more complex ones. By engaging in “see–touch–read aloud” learning, students develop chunked memory units based on spelling. This enables learners to mentally visualize clusters of letters as coherent units of spelling and sound, which contributes to the formation of word-form memory. Utilizing these internalized word forms supports greater reading fluency and improves spelling accuracy.

3. Phase Three: Generalization of Word-Form Memory

In the final phase, learners read a wider variety of words through tactile exploration to further generalize and reinforce the word-form memories established in Phase Two. In particular, reading image-rich sentences through “see–touch–read aloud” activities engages semantic processing, which helps establish bypass pathways between the visual and phonological lexicons.

This process facilitates the automatic recall of phonological representations linked to the visual forms of spellings and words—an effect further detailed in the section on RAN facilitation mechanisms.

III. Clinical Study on Tactile Reading-Based Learning for Children with LD

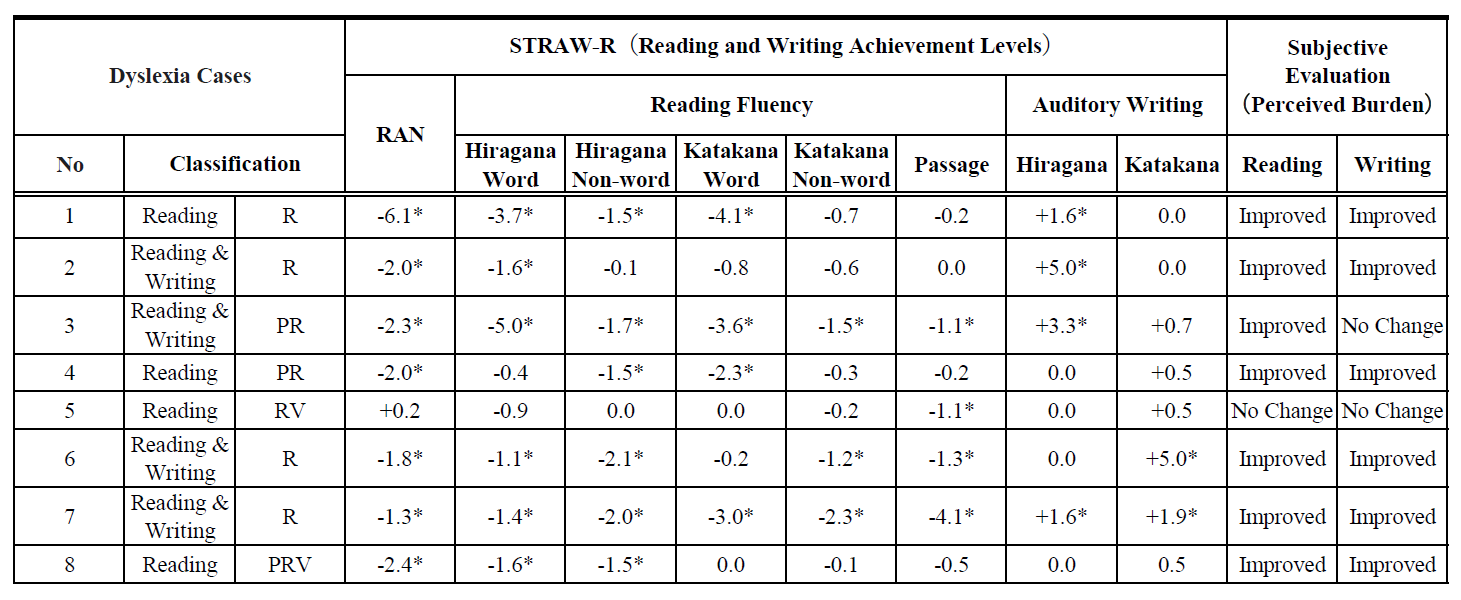

Between 2023 and 2024, I (Miyazaki) conducted a clinical study involving eight children with dyslexia and severe reading and writing learning disabilities (LD), all of whom exhibited naming speed deficits and difficulties at the kana level (eight with reading difficulties, of whom four also had writing difficulties; average age: 10.4 years). Although the tactile reading training used in the study employed different material designs and text stimuli from those used in Sawaru Glyph, the letters were similarly three-dimensional in height and shape. To assess reading and writing ability, we used the Revised Standardized Reading and Writing Screening Test (STRAW-R)【12】, along with a custom-designed questionnaire to evaluate perceived burden related to reading and writing tasks.

Table 1. Changes in STRAW-R Scores (z-score differences) and Subjective Evaluations After Haptic Learning

(Table 1) Values showing a z-score improvement of 1.0 or higher are marked with an asterisk (*). For RAN and speeded reading tasks, improvement is indicated by a decrease in required time (–); for dictation tasks, improvement is indicated by an increase in the number of correct responses (+). Subjective evaluation: After completing the learning tasks, each child was asked whether they felt a reduction in the burden of reading and writing, with responses recorded separately for each domain.
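The marking rule in the note to Table 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `TaskScore`, `z_score`, `z_change`, `improvement`, and `table_cell` are hypothetical, the example values are invented and are not data from the study, and timed tasks are assumed to be scored so that a longer completion time gives a higher z-score.

```python
from dataclasses import dataclass


@dataclass
class TaskScore:
    """Pre- and post-training z-scores for one task (hypothetical structure)."""
    name: str
    pre_z: float
    post_z: float
    lower_is_better: bool  # True for timed tasks such as RAN and speeded reading


def z_score(raw: float, norm_mean: float, norm_sd: float) -> float:
    """Standard z-score of a raw score relative to normative mean and SD."""
    return (raw - norm_mean) / norm_sd


def z_change(task: TaskScore) -> float:
    """Signed z-score difference (post minus pre), as it would appear in the table."""
    return task.post_z - task.pre_z


def improvement(task: TaskScore) -> float:
    """Size of the change in the improving direction (positive means better)."""
    delta = z_change(task)
    return -delta if task.lower_is_better else delta


def table_cell(task: TaskScore, threshold: float = 1.0) -> str:
    """Format the signed change and append '*' when the improvement meets the threshold."""
    mark = "*" if improvement(task) >= threshold else ""
    return f"{z_change(task):+.1f}{mark}"


if __name__ == "__main__":
    # Invented example values, for illustration only.
    ran = TaskScore("RAN", pre_z=2.8, post_z=1.1, lower_is_better=True)
    dictation = TaskScore("kana dictation", pre_z=-2.0, post_z=-1.2, lower_is_better=False)
    for task in (ran, dictation):
        print(task.name, table_cell(task))
```

Keeping the signed change separate from the direction-adjusted improvement mirrors the table note: a decrease (–) counts as improvement for timed tasks, while an increase (+) counts as improvement for dictation.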

1. Improvement in Reading Fluency and Reduction in Perceived Reading Burden

Among the eight children with reading difficulties, seven showed a reduction in reading time of 1.5 z-score points or more on at least one of the five STRAW-R speeded reading tasks (hiragana words, katakana words, non-words, and sentences). Under a criterion of a 1.0 z-score difference, these same children improved their reading time on two or more tasks. The most notable improvements were observed in the word and non-word tasks. This suggests that the stepwise learning process, which progressed from kana (50 basic sounds) to special syllables, words, and then short sentences, facilitated the formation of letter-shape and word-form memory, enabling chunked word recognition.

Moreover, the observed fluency improvement even in meaningless non-words implies that the formation of associative memory between kana and sounds was also promoted. Children who showed improvement in the speeded reading tasks also reported, in the subjective questionnaire, that the burden of reading had decreased, indicating that objective performance gains aligned with their subjective experiences.

2. Improvement in Writing Recall and Reduction in Writing Burden

All four children with writing difficulties showed an increase in the number of correct responses exceeding a z-score difference of 1.5 on at least one of the two kana writing tasks in the STRAW-R. In addition, all four children demonstrated a reduction in the time required to begin writing. Touching and reading aloud from three-dimensional hiragana and katakana letters is thought to have promoted the formation of concrete and detailed letter-shape memory for kana. Furthermore, reading aloud accompanied by tactile input likely strengthened associative memory between letter forms and sounds, which in turn facilitated the encoding function needed to recall letter shapes from auditory input in writing tasks (“writing what was heard”). The shortened time between hearing and initiating writing is also thought to reflect more efficient retrieval of letter-shape memory from auditory cues. All four children reported a reduction in perceived writing burden in the subjective questionnaire, consistent with the observed improvements in writing function.

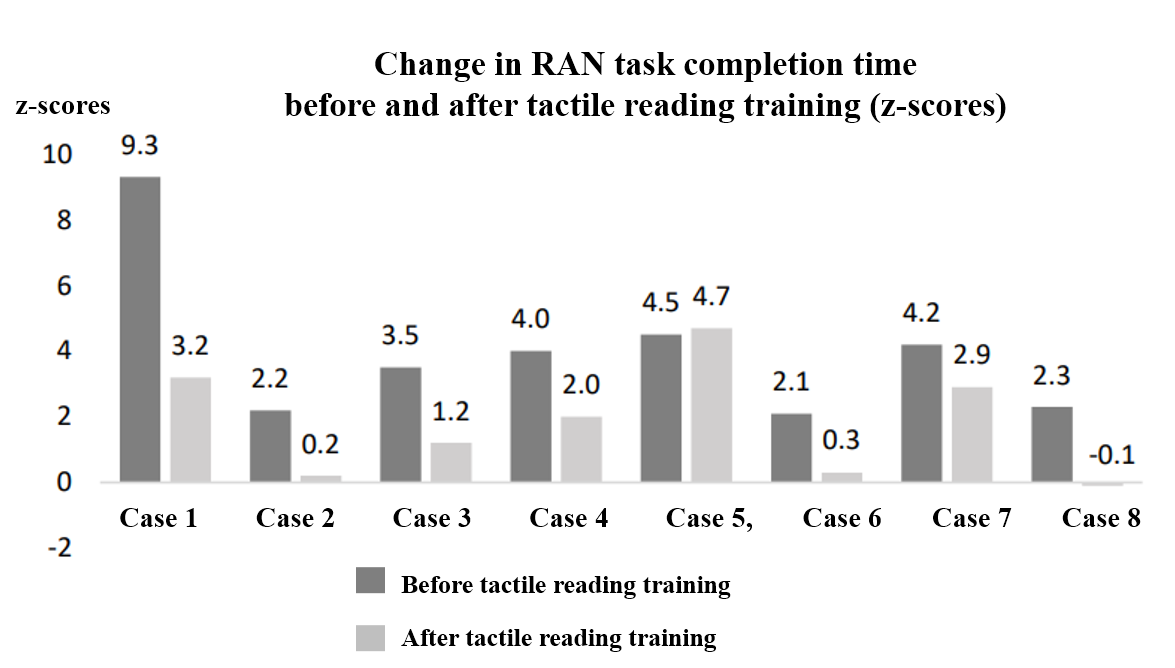

3. Facilitative Changes in Rapid Automatized Naming (RAN)

In this study, all eight participating children with dyslexia exhibited impairments in Rapid Automatized Naming (RAN).

Following the tactile reading-based learning, seven of the eight children showed a significant reduction in the average time required to complete the three RAN tasks included in the STRAW-R (see Figure 5). Specifically, six children improved by 1.5 z-score points or more, and one child improved by between 1.0 and 1.5 z-score points. The enhancement of RAN performance may be explained by two key effects of the haptic learning method:

- Improvement in the formation and recall of letter-shape memory

- Strengthening of the connection between visual letter forms and phonological representations (spoken words)

These two effects likely worked together to promote RAN enhancement through “see–touch–read aloud” multisensory activities.

In typical naming tasks, visual stimuli are processed through the semantic system, followed by retrieval of phonological information from the phonological lexicon. However, studies involving patients with aphasia have shown that visual letter cues can facilitate naming performance【13】. In the current study, the improvement in recalling letter forms through tactile input, combined with stronger connections between letters and sounds, likely enabled the visual and phonological lexicons to interact. The recalled visual forms (“letter-shapes”) of the names of pictures or numbers could then act as internal cognitive cues, supporting phonological retrieval.

Furthermore, neurological studies have reported that connectivity between the left fusiform gyrus and areas responsible for semantic memory and phonological processing is strengthened during the development of naming ability【14】. The left fusiform gyrus plays a central role in representing both letter and word forms, and also in integrating visual, tactile, and auditory information. Thus, the multisensory reading process involving vision, touch, and sound may have contributed to changes in the connectivity of neural networks centered around the left fusiform gyrus.

While the exact mechanisms behind RAN improvement remain unclear, future studies using functional brain imaging (e.g., fMRI) will be essential for further investigation.

4. Adaptability of Tactile Reading-Based Learning and Individual Differences

In this study, seven out of eight children demonstrated improvements in RAN and reading/writing abilities following haptic-based learning. However, one child did not show any such improvements, and reported no reduction in perceived burden in the subjective questionnaire. This finding suggests that no single learning or training method is universally effective, and that the effectiveness of tactile-based learning may vary among individuals.

To explore what might distinguish responders from non-responders, I (Miyazaki) focused on performance in the Rey-Osterrieth Complex Figure Test (ROCFT), a tool commonly used to assess visual memory. Specifically, I examined changes in reproduction accuracy 3 minutes and 30 minutes after copying the figure. In the ROCFT, a “reminiscence effect” is known to occur often, in which the 30-minute delayed reproduction outperforms the 3-minute one due to cognitive reorganization over time【15】. This effect may reflect characteristics of memory formation involving multisensory input, including tactile perception.

Upon analysis, children whose 30-minute reproduction scores exceeded their 3-minute scores tended to respond well to tactile reading-based learning. In contrast, the one child who showed no improvement from haptic learning also showed a decline in reproduction performance between the 3-minute and 30-minute intervals. These findings suggest that performance on ROCFT reproduction tasks may serve as a potential indicator of an individual’s adaptability to haptic-based learning. Moving forward, we aim to develop screening tasks that can more directly evaluate individual suitability for tactile-based learning methods.
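The ROCFT comparison described above amounts to checking the sign of the difference between the 30-minute and 3-minute reproduction scores and setting it against each child’s training outcome. The following Python sketch shows one way to tabulate that. The function names and the sample records are hypothetical and invented for illustration; they are not data from the study, and the sign check is not a validated screening rule.

```python
from collections import Counter


def reminiscence_gain(recall_3min: float, recall_30min: float) -> float:
    """Difference between the 30-minute and 3-minute ROCFT reproduction scores."""
    return recall_30min - recall_3min


def cross_tabulate(records):
    """Count children by (showed reminiscence gain, responded to training).

    Each record is a tuple: (3-minute score, 30-minute score, responded).
    """
    counts = Counter()
    for recall_3min, recall_30min, responded in records:
        gained = reminiscence_gain(recall_3min, recall_30min) > 0
        counts[(gained, responded)] += 1
    return counts


# Invented sample records, for illustration only.
sample_records = [
    (14.0, 17.5, True),
    (20.0, 22.0, True),
    (18.5, 16.0, False),
]

if __name__ == "__main__":
    for (gained, responded), n in sorted(cross_tabulate(sample_records).items()):
        print(f"reminiscence gain={gained}, responded={responded}: {n}")
```

With only eight participants, such a table can at most suggest a pattern worth testing in a larger sample.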

V. Future Outlook

Looking ahead, we aim to build scientific evidence for Sawaru Glyph from two complementary perspectives: behavioral indicators and physiological indicators. In clinical research, we plan to move beyond pilot studies and conduct both controlled comparative experiments and brain function studies using fMRI. Our goal is to establish a more scientifically grounded model not only for improving literacy skills but also for explaining the facilitative effects on Rapid Automatized Naming (RAN).

One of the world’s oldest known writing systems, discovered near Baghdad in present-day Iraq (Figure 7), dates back approximately 5,500 years. These early symbols, carved into clay tablets to represent livestock and quantities, suggest that writing began with tactile interaction. Given that vision and hearing are thought to have evolved from tactile perception, it is likely that the act of touching enabled humans to link spoken language with physical form. I (Miyazaki) believe that the research behind Sawaru Glyph brings us closer to understanding the fundamental relationship between people and written language, and that this work can meaningfully support individuals who struggle with reading and writing.

References

【1】Miyazaki, K., Hashimoto, Y., Uchiyama, H., & Sakai, M. (2025). Multisensory learning with haptic reading plates improved RAN (Rapid Automatized Naming), reading, and writing skills. Cognitive Neuroscience, 26(3–4). (In Japanese; English translation available.)

【2】Miyazaki, K. (2020). Spelling learning device and method. Japan Patent Office Publication No. P2020-187271A.

【3】Uno, A. (2016). Developmental dyslexia. Higher Brain Function Research, 36(2), 170–176.

【4】Seki, A. (2009). Functional imaging of developmental dyslexia in children. Cognitive Neuroscience, 11(1), 54–58.

【5】Uno, A., Sunohara, N., Kaneko, M., Awaya, N., Kozuka, J., & Goto, T. (2018). Cognitive deficits underlying developmental dyslexia: A comparison with age-matched controls. Higher Brain Function Research, 38(3), 267–271.

【6】Philip, A. P., & Cheong, L. S. (2011). Effects of the clay modeling program on the reading behavior of children with dyslexia: A Malaysian case study. The Asia-Pacific Education Researcher, 20(3), 456–468.

【7】Nishino, Y., & Ando, H. (2008). Neural mechanisms of object recognition based on 3D shape. Japanese Journal of Psychology Review, 51(2), 330–346.

【8】Miyazaki, K., Yamada, S., & Kawasaki, S. (2023). Enhancement of visual memory in Rey-Osterrieth Complex Figure Test through multisensory learning using vision and touch. Cognitive Neuroscience, 24(3–4), 87–92. (In Japanese; English translation available.)

【9】Bara, F., Gentaz, E., Colé, P., & Sprenger-Charolles, L. (2004). The visuo-haptic and haptic exploration of letters increases the kindergarten-children’s understanding of the alphabetic principle. Cognitive Development, 19(3), 433–449.

【10】Kaneko, M., Uno, A., Sunohara, N., & Awaya, N. (2012). Predictive power and limitations of pre-school RAN screening for reading difficulties after school entry. Brain and Development, 44(1), 29–34.

【11】Uno, A., Sunohara, N., Kaneko, M., Goto, T., Awaya, N., & Kozuka, J. (2015). Kana training using a bypass method for children with developmental dyslexia: A case series. Japanese Journal of Speech, Language and Hearing Research, 56(2), 171–179.

【12】Uno, A., Sunohara, N., Kaneko, M., & Wydell, T. N. (2017). STRAW-R: Revised Standardized Reading and Writing Screening Test – Assessment of Accuracy and Fluency. Interna Publishing: Tokyo.

【13】Wakamatsu, C., & Ishiai, S. (2018). Facilitative mechanism of initial kana-letter cues in naming tasks for aphasia patients with poor phonemic cueing. Japanese Journal of Neuropsychology, 34(4), 299–309.

【14】Arya, R., Ervin, B., Buroker, J., Greiner, H. M., Byars, A. W., Rozhkov, L., et al. (2022). Neuronal circuits supporting development of visual naming revealed by intracranial coherence modulations. Frontiers in Neuroscience, 16, 867021.

【15】Ogifu, Y., Kawasaki, S., Okumura, T., & Nakanishi, M. (2019). Developmental patterns and scale construction of the Rey-Osterrieth Complex Figure Test in childhood. Journal of Biomedical Fuzzy Systems, 21(1), 69–77.

【16】Miyazaki, K. (2023). Learning materials composed of convex shapes and sizes suitable for haptic learning. Japan Utility Model No. U3240844.

【17】NHK (2022, October 25). “Moji”: The Double-edged Sword That Captivated Humanity [Television broadcast].